Method

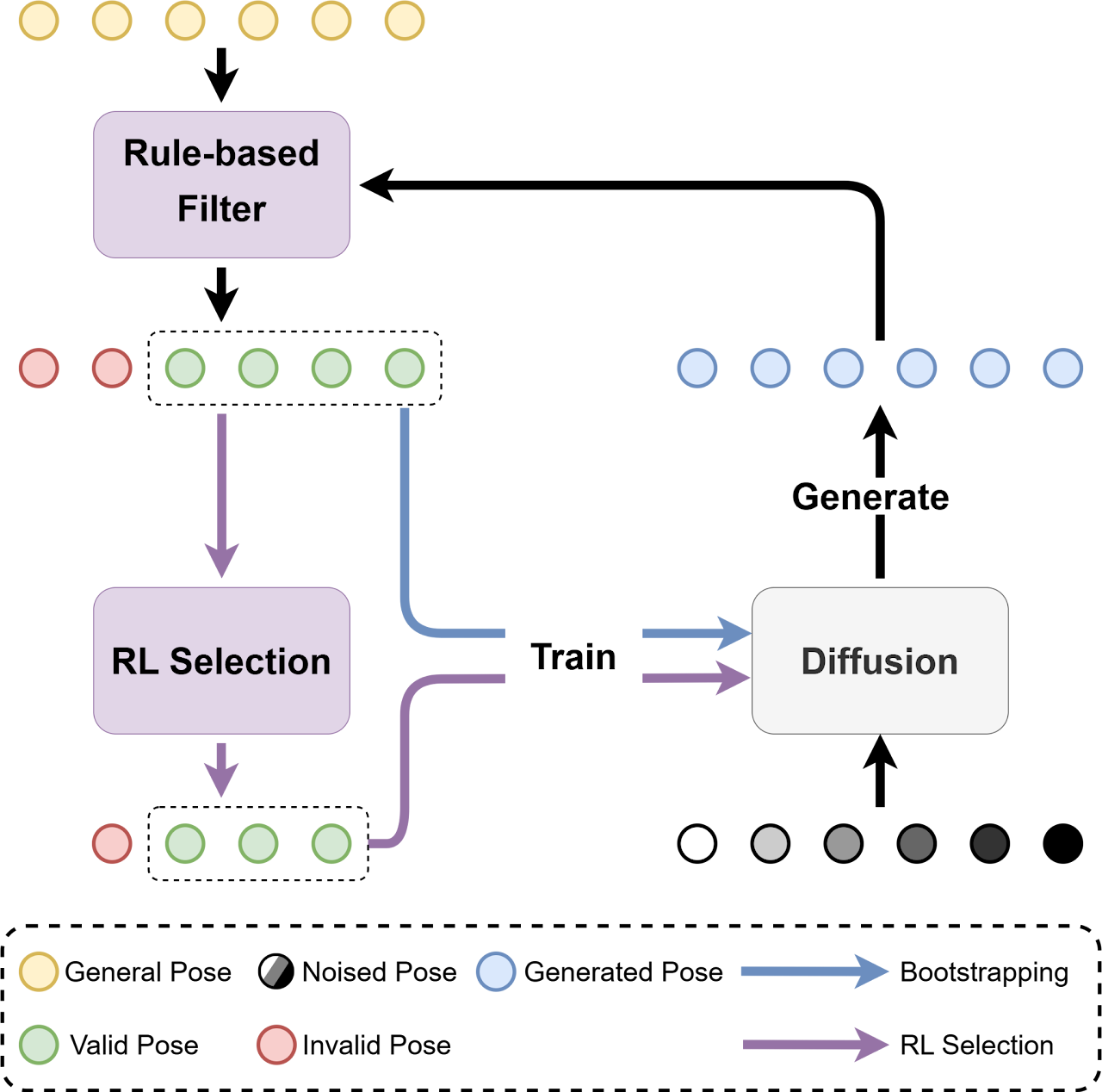

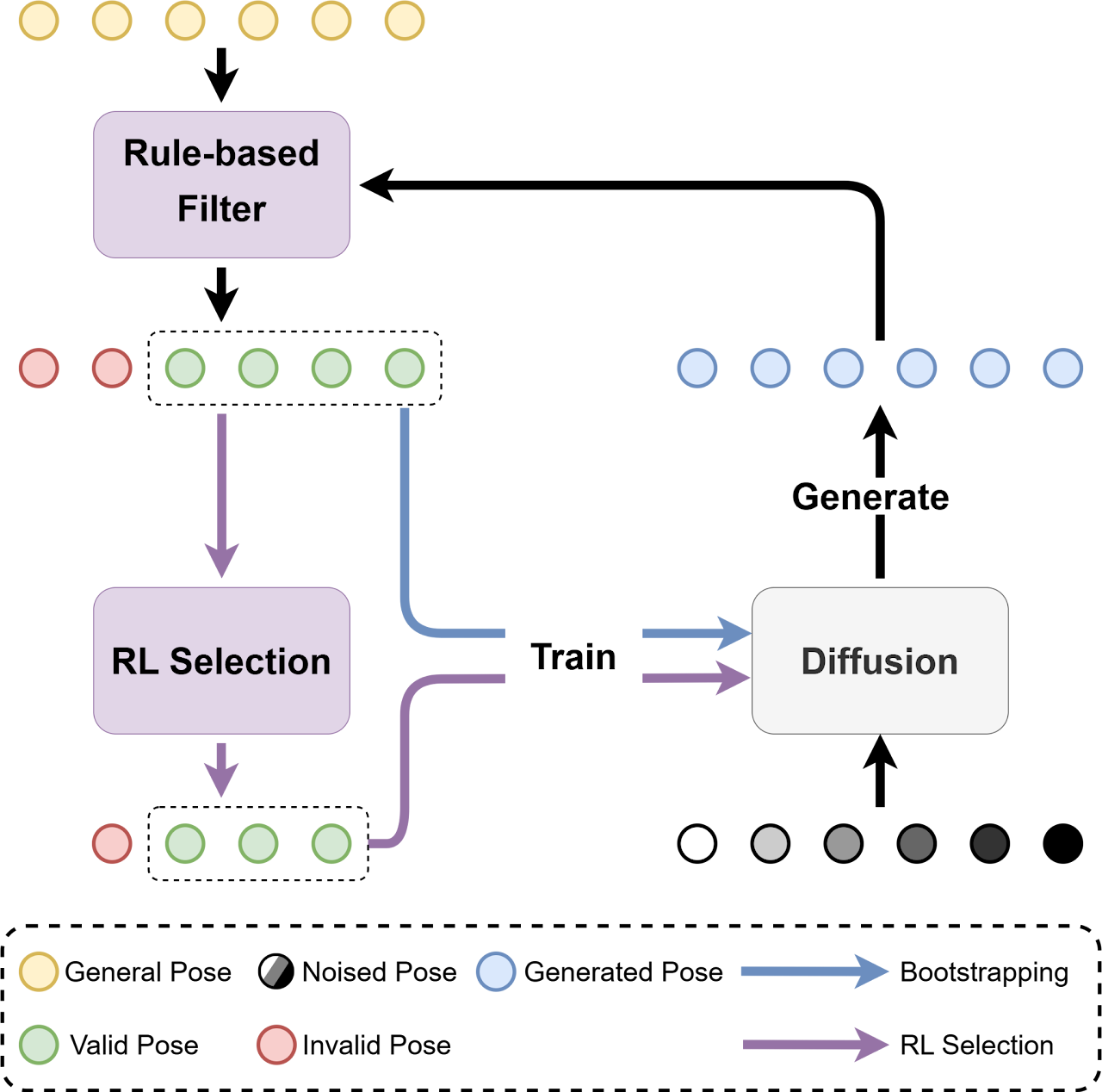

Data Generation

Qualitative Results

Stapler Clicking

Spray Bottle Pressing

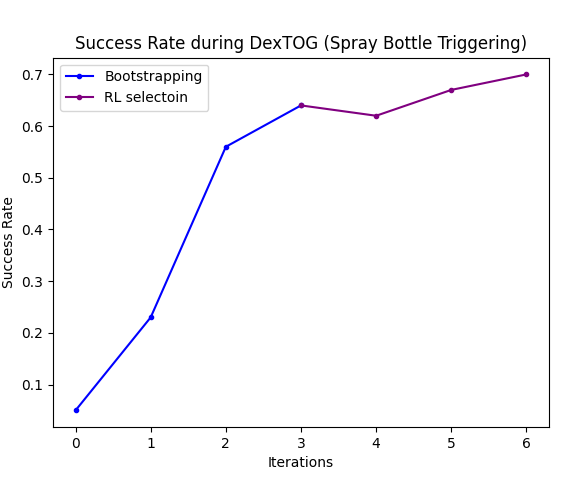

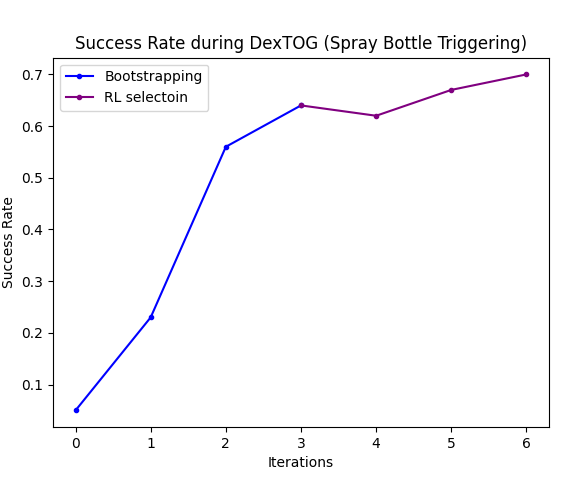

Spray Bottle Triggering

Bottle Cap Twisting

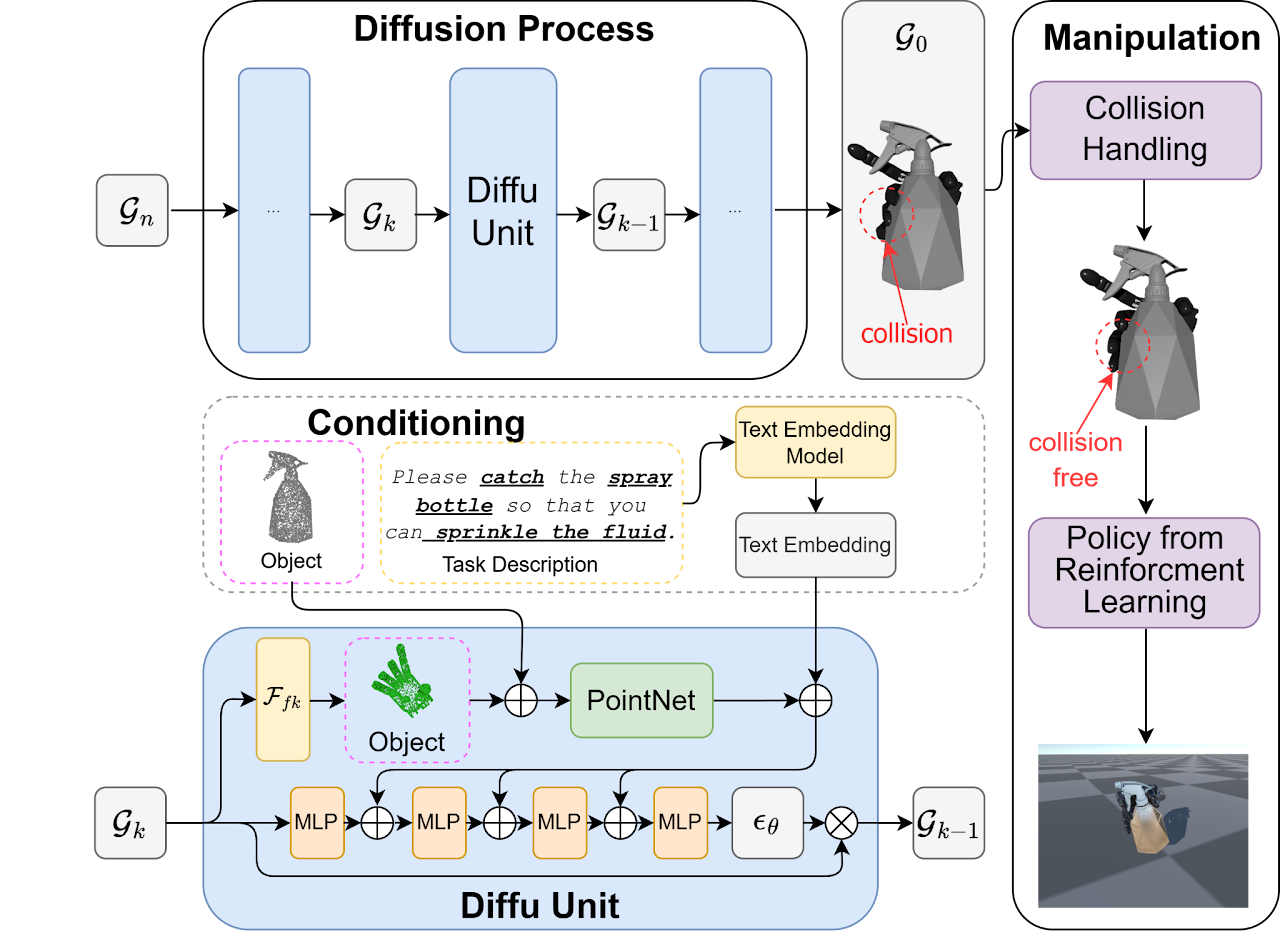

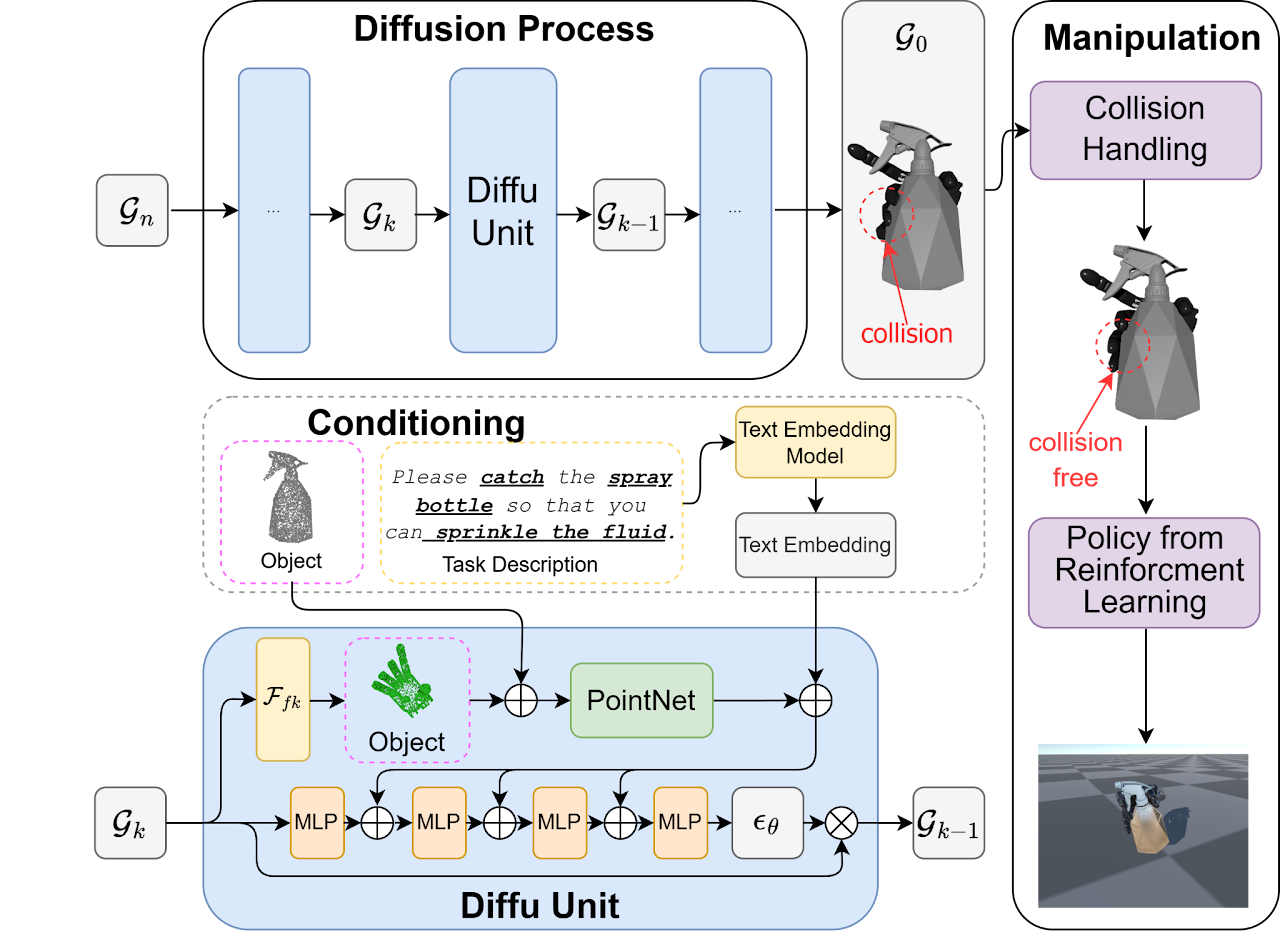

This study introduces DexTOG, a novel language-guided, diffusion-based learning framework for task-oriented grasping (TOG) with dexterous hands. Whereas existing methods mainly focus on two-finger grippers, this work addresses the added complexity of dexterous manipulation. The system must identify optimal grasp poses that are not unique under a given task constraint, accommodate multiple valid grasps, and search a high-degree-of-freedom configuration space during grasp planning. DexTOG comprises DexDiffu, a diffusion-based grasp pose generation model, and a data engine that supports DexDiffu. Using DexTOG, we also build a new dataset, DexTOG-80K, in which a Shadow robot hand performs various tasks on 80 objects from 5 categories, showcasing the hand's dexterity and multi-task capability. This research advances dexterous TOG and also provides a comprehensive dataset and simulation validation, setting a new benchmark for robotic manipulation research.
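The abstract describes DexDiffu only as a language-conditioned, diffusion-based grasp pose generator and gives no architecture details. To illustrate what diffusion-based generation means in this setting, the sketch below runs a generic DDPM-style reverse (denoising) loop over a flattened grasp vector, conditioned on a text embedding. It is not DexTOG's implementation. Everything in it is an assumption: the linear noise schedule, the noise-prediction parameterization with σ_t² = β_t, the grasp-vector size, and the hypothetical names `Denoiser`, `linear_beta_schedule`, `sample_grasp` and `zero_denoiser`. In particular, `zero_denoiser` is a placeholder that stands in for a trained network.

```python
import math
import random
from typing import Callable, List, Optional, Sequence

# Hypothetical denoiser: predicts the noise in x_t given the step t and a
# language-condition embedding. In practice this would be a trained network.
Denoiser = Callable[[Sequence[float], int, Sequence[float]], List[float]]


def linear_beta_schedule(num_steps: int,
                         beta_start: float = 1e-4,
                         beta_end: float = 0.02) -> List[float]:
    """Linearly spaced noise variances beta_1..beta_T (an assumed schedule)."""
    if num_steps == 1:
        return [beta_start]
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]


def sample_grasp(denoiser: Denoiser,
                 condition: Sequence[float],
                 dim: int,
                 num_steps: int = 50,
                 seed: Optional[int] = None) -> List[float]:
    """Draw one flattened grasp vector with a DDPM-style reverse process."""
    rng = random.Random(seed)
    betas = linear_beta_schedule(num_steps)
    alphas = [1.0 - b for b in betas]
    # Cumulative products alpha_bar_t = alpha_1 * ... * alpha_t.
    alpha_bars: List[float] = []
    prod = 1.0
    for a in alphas:
        prod *= a
        alpha_bars.append(prod)

    # Start from pure Gaussian noise x_T.
    x = [rng.gauss(0.0, 1.0) for _ in range(dim)]
    for t in reversed(range(num_steps)):
        eps = denoiser(x, t, condition)
        coef = betas[t] / math.sqrt(1.0 - alpha_bars[t])
        # Mean of p(x_{t-1} | x_t) under the noise-prediction parameterization.
        mean = [(xi - coef * ei) / math.sqrt(alphas[t]) for xi, ei in zip(x, eps)]
        if t > 0:
            # Add fresh noise with sigma_t^2 = beta_t, except at the final step.
            sigma = math.sqrt(betas[t])
            x = [m + sigma * rng.gauss(0.0, 1.0) for m in mean]
        else:
            x = mean
    return x


if __name__ == "__main__":
    # Placeholder denoiser and text embedding, for illustration only.
    def zero_denoiser(x, t, condition):
        return [0.0] * len(x)

    text_embedding = [0.1, 0.2, 0.3]
    for seed in range(3):
        grasp = sample_grasp(zero_denoiser, text_embedding, dim=10, num_steps=20, seed=seed)
        print(seed, [round(v, 3) for v in grasp[:3]])
```

Calling `sample_grasp` repeatedly with the same `condition` but different seeds yields different grasp vectors. This is one way a generative model can represent the multiple valid grasps that a single task description may admit, rather than regressing to one averaged pose.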

@ARTICLE{dextog,
  author={Zhang, Jieyi and Xu, Wenqiang and Yu, Zhenjun and Xie, Pengfei and Tang, Tutian and Lu, Cewu},
  journal={IEEE Robotics and Automation Letters},
  title={DexTOG: Learning Task-Oriented Dexterous Grasp With Language Condition},
  year={2025},
  volume={10},
  number={2},
  pages={995-1002},
  keywords={Grasping;Robots;Planning;Grippers;Vectors;Three-dimensional displays;Noise reduction;Engines;Noise;Diffusion processes;Deep learning in grasping and manipulation;dexterous manipulation},
  doi={10.1109/LRA.2024.3518116}
}